Published by: Anu Poudeli

Published date: 04 Jul 2023

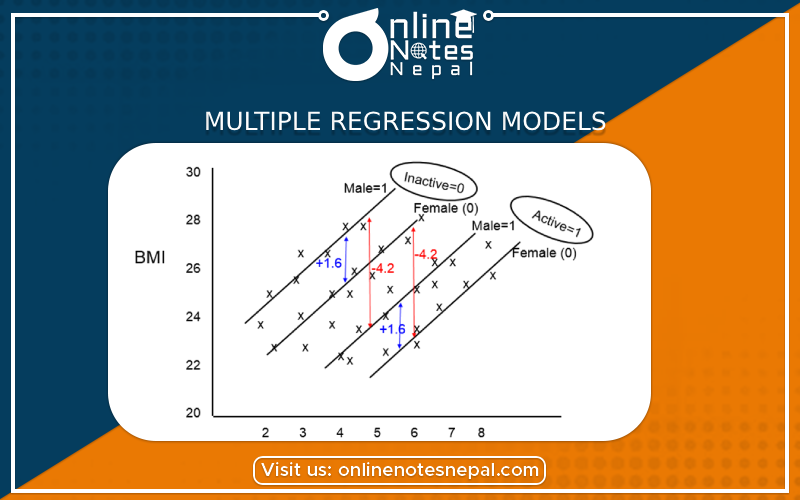

Multiple regression models are a type of statistical analysis used to predict a dependent variable, or to explain its relationship with two or more independent variables. In these models, the dependent variable is the outcome or response variable, while the independent variables are the predictors or explanatory variables. The goal is to find the best-fitting plane (or hyperplane, with more than two predictors) through the data points, the one that minimizes the difference between the predicted values and the actual values of the dependent variable, typically measured as the sum of squared differences.
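As an illustration of what "best-fitting" means here, the following is a minimal sketch in plain Python that fits a model with two predictors by solving the least-squares normal equations. The helper names (`solve_linear_system`, `fit_ols`, `predict`) and the data are made up for this example; in practice a statistics library would normally do this work.

```python
def solve_linear_system(a, b):
    """Solve a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    # Augmented matrix [a | b] so row operations act on both sides.
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        # Use the row with the largest entry in this column as the pivot.
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    # Back-substitution on the upper-triangular system.
    x = [0.0] * n
    for i in range(n - 1, -1, -1):
        s = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - s) / m[i][i]
    return x


def fit_ols(rows, y):
    """Return [b0, b1, ..., bn] minimizing the sum of squared residuals."""
    # Prepend a constant 1 to each row so the first coefficient is the intercept.
    design = [[1.0] + list(r) for r in rows]
    k = len(design[0])
    # Normal equations: (X^T X) b = X^T y
    xtx = [[sum(d[i] * d[j] for d in design) for j in range(k)] for i in range(k)]
    xty = [sum(d[i] * yi for d, yi in zip(design, y)) for i in range(k)]
    return solve_linear_system(xtx, xty)


def predict(coefs, row):
    """Predicted value: intercept plus the sum of coefficient * predictor."""
    return coefs[0] + sum(b * x for b, x in zip(coefs[1:], row))


# Made-up data generated from y = 1 + 2*x1 + 3*x2 with no noise,
# so the fit should recover roughly those three numbers.
rows = [(1, 2), (2, 1), (3, 4), (4, 3), (5, 6)]
y = [1 + 2 * x1 + 3 * x2 for x1, x2 in rows]

coefs = fit_ols(rows, y)
print([round(b, 6) for b in coefs])
print(predict(coefs, (6, 5)))
```

Because the example data contain no noise, the recovered intercept and coefficients should be very close to 1, 2, and 3. With real data the fit only approximates the underlying relationship, and the leftover differences are the residuals.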

Key concepts and components of multiple regression models include:

1. Equation: $Y = \beta_0 + \beta_1 X_1 + \beta_2 X_2 + \dots + \beta_n X_n + \varepsilon$, where $Y$ is the dependent variable, $X_i$ represents the independent variables, $\beta_0$ is the intercept (constant), $\beta_1$ to $\beta_n$ are the regression coefficients, and $\varepsilon$ is the error term representing the unexplained variation.

2. Coefficients ($\beta_i$): Assuming that all other independent variables remain constant, each coefficient $\beta_i$ indicates the change in the dependent variable ($Y$) for a one-unit change in the corresponding independent variable ($X_i$).

3. Intercept ($\beta_0$): The intercept $\beta_0$ is the expected value of the dependent variable when all independent variables are equal to zero. The intercept might not always have a useful interpretation, for example when zero lies outside the observed range of the predictors.

4. Assumptions: Multiple regression models rely on a number of assumptions, including linearity (the relationship between the dependent and independent variables is linear), independence of the errors, constant error variance (homoscedasticity), and normally distributed errors.

5. Multicollinearity: Multicollinearity is the term used to describe two or more independent variables that are highly correlated with one another, which results in unstable estimates of the regression coefficients. To prevent inaccurate results, multicollinearity must be recognized and addressed.

6. Model Fit: R-squared ($R^2$) is a commonly used metric to assess how well the regression model fits the data. It shows how much of the variance in the dependent variable can be attributed to the independent variables. $R^2$ might not, however, give a complete picture of model performance, and it is important to take into account other evaluation metrics such as adjusted R-squared, AIC, or BIC.

7. Hypothesis Tests: Hypothesis tests can be used to assess whether specific regression coefficients are significantly different from zero, which indicates whether the corresponding independent variables have a statistically significant effect on the dependent variable.

8. Residuals: Residuals are the differences between the dependent variable's actual values and those predicted by the regression model. Analyzing residuals helps verify the model's assumptions and reveal problems such as non-linearity or non-constant variance.

9. Outliers and Influential Points: Outliers and influential points can significantly affect the outcomes of a regression analysis. Investigating and handling these extreme observations carefully is crucial since they can distort the model fit.
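Several of the quantities in the list above are simple to compute by hand. The sketch below, in plain Python, shows residuals, R-squared, adjusted R-squared, and a variance inflation factor (VIF), a common multicollinearity check that the list itself does not name. All data are made up, `y_pred` stands in for the output of some fitted model, and the function names are illustrative only. The VIF shortcut used here, 1 / (1 - r²) with r the correlation between the predictors, applies only to the two-predictor case.

```python
def r_squared(y, y_pred):
    """Share of the variance in y that the predictions account for."""
    mean_y = sum(y) / len(y)
    ss_res = sum((yi - pi) ** 2 for yi, pi in zip(y, y_pred))  # unexplained
    ss_tot = sum((yi - mean_y) ** 2 for yi in y)               # total
    return 1 - ss_res / ss_tot


def adjusted_r_squared(r2, n_obs, n_predictors):
    """R-squared penalized for the number of predictors in the model."""
    return 1 - (1 - r2) * (n_obs - 1) / (n_obs - n_predictors - 1)


def vif_two_predictors(x1, x2):
    """VIF for a model with exactly two predictors: 1 / (1 - r^2)."""
    m1 = sum(x1) / len(x1)
    m2 = sum(x2) / len(x2)
    sxy = sum((a - m1) * (b - m2) for a, b in zip(x1, x2))
    sxx = sum((a - m1) ** 2 for a in x1)
    syy = sum((b - m2) ** 2 for b in x2)
    r_sq = sxy * sxy / (sxx * syy)  # squared Pearson correlation
    return 1 / (1 - r_sq)


# Made-up observed values and made-up predictions from a hypothetical
# two-predictor model.
y = [3, 5, 7, 10, 12]
y_pred = [3.2, 4.8, 7.1, 9.6, 12.3]

# Residuals: actual minus predicted, one per observation.
residuals = [yi - pi for yi, pi in zip(y, y_pred)]

r2 = r_squared(y, y_pred)
adj_r2 = adjusted_r_squared(r2, n_obs=len(y), n_predictors=2)

# Made-up predictor columns that are fairly strongly correlated.
x1 = [1, 2, 3, 4, 5]
x2 = [2, 1, 4, 3, 6]
vif = vif_two_predictors(x1, x2)

print(residuals)
print(r2, adj_r2)
print(vif)
```

Adjusted R-squared is always at most R-squared, and the gap grows as predictors are added without a matching gain in fit. A VIF of 1 means the predictors are uncorrelated; larger values mean the coefficient estimates become less stable. Where to draw the line is a rule-of-thumb judgment, not a fixed standard.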